SYNOPSIS

pmgcm <COMMAND> [ARGS] [OPTIONS]

pmgcm create

Create initial cluster config with current node as master.

pmgcm delete <cid>

Remove a node from the cluster.

- <cid>: <integer> (1 - N)

  Cluster Node ID.

pmgcm help [OPTIONS]

Get help about the specified command.

- --extra-args <array>

  Shows help for a specific command.

- --verbose <boolean>

  Verbose output format.

pmgcm join <master_ip> [OPTIONS]

Join a new node to an existing cluster.

- <master_ip>: <string>

  IP address.

- --fingerprint ^(:?[A-Z0-9][A-Z0-9]:){31}[A-Z0-9][A-Z0-9]$

  SSL certificate fingerprint.
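You can check a fingerprint against the `--fingerprint` pattern locally before you attempt a join. Below is a minimal POSIX shell sketch. It assumes the fingerprint is available as a single string. `is_valid_fingerprint` is a hypothetical helper, not part of pmgcm. It reuses the documented pattern verbatim, so it accepts exactly what that pattern accepts: 32 pairs of upper-case letters or digits, separated by colons.

```shell
#!/bin/sh
# Sketch: validate a fingerprint string against the pattern documented
# for `pmgcm join --fingerprint`. Hypothetical helper, not part of pmgcm.
is_valid_fingerprint() {
    nl='
'
    # grep tests each line separately, so reject multi-line input up front.
    case $1 in
        *"$nl"*) return 1 ;;
    esac
    # Pattern copied verbatim from the --fingerprint option description.
    printf '%s\n' "$1" |
        grep -Eq '^(:?[A-Z0-9][A-Z0-9]:){31}[A-Z0-9][A-Z0-9]$'
}

# Usage (placeholder variables):
#   if is_valid_fingerprint "$FP"; then
#       pmgcm join "$MASTER_IP" --fingerprint "$FP"
#   fi
```

The pattern allows upper-case letters and digits only, so a lower-case fingerprint copied from another tool is rejected.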

pmgcm join-cmd

Prints the command for joining a new node to the cluster. You need to execute the command on the new node.

pmgcm join_cmd

An alias for pmgcm join-cmd.

pmgcm promote

Promote current node to become the new master.

pmgcm status [OPTIONS]

Cluster node status.

- --list_single_node <boolean> (default = 0)

  List local node if there is no cluster defined. Please note that RSA keys and fingerprint are not valid in that case.

pmgcm sync [OPTIONS]

Synchronize cluster configuration.

- --master_ip <string>

  Optional IP address for master node.

pmgcm update-fingerprints

Notify master to refresh all certificate fingerprints.

DESCRIPTION

We are living in a world where email is becoming more and more important; failures in email systems are not acceptable. To meet these requirements, we developed the Proxmox HA (High Availability) Cluster.

The Proxmox Mail Gateway HA Cluster consists of a master node and several slave nodes (at least one slave node). Configuration is done on the master, and data is synchronized to all cluster nodes via a VPN tunnel. This provides the following advantages:

- centralized configuration management
- fully redundant data storage
- high availability
- high performance

We use a unique application-level clustering scheme, which provides extremely good performance. Special care was taken to make management as easy as possible. A complete cluster setup is done within minutes, and nodes automatically reintegrate after temporary failures, without any operator interaction.

Hardware Requirements

There are no special hardware requirements, although it is highly recommended to use fast and reliable server hardware with redundant disks on all cluster nodes (hardware RAID with BBU and write cache enabled).

The HA Cluster can also run in virtualized environments.

Subscriptions

Each node in a cluster has its own subscription. If you want support for a cluster, each cluster node needs to have a valid subscription. All nodes must have the same subscription level.

Load Balancing

It is usually advisable to distribute mail traffic among all cluster nodes. Please note that this is not always required, because it is also reasonable to use only one node to handle SMTP traffic. The second node can then be used as a quarantine host that only provides the web interface to the user quarantine.

The normal mail delivery process looks up DNS Mail Exchange (MX) records to determine the destination host. An MX record tells the sending system where to deliver mail for a certain domain. It is also possible to have several MX records for a single domain, each of which can have different priorities. For example, our MX record looks like this:

```
# dig -t mx proxmox.com

;; ANSWER SECTION:
proxmox.com.            22879   IN      MX      10 mail.proxmox.com.

;; ADDITIONAL SECTION:
mail.proxmox.com.       22879   IN      A       213.129.239.114
```

Notice that there is a single MX record for the domain proxmox.com, pointing to mail.proxmox.com. The dig command automatically outputs the corresponding address record, if it exists. In our case it points to 213.129.239.114. The priority of our MX record is set to 10 (preferred default value).

Hot standby with backup MX records

Many people do not want to install two redundant mail proxies. Instead, they use the mail proxy of their ISP as a fallback. This can be done by adding an additional MX record with a lower priority (higher number). Continuing from the example above, this would look like:

```
proxmox.com.            22879   IN      MX      100 mail.provider.tld.
```

In such a setup, your provider must accept mail for your domain and forward it to you. Please note that this is not advisable, because spam detection needs to be done by the backup MX server as well, and external servers provided by ISPs usually don’t do this.

However, you will never lose mail with such a setup, because the sending Mail Transport Agent (MTA) will simply deliver the mail to the backup server (mail.provider.tld) if the primary server (mail.proxmox.com) is not available.
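The fallback can be simulated in a few lines of shell. This is only a sketch and does not describe how any particular MTA is implemented. The hypothetical `pick_mx_target` function reads "PREFERENCE HOST" lines, the format printed by `dig +short -t mx`. It tries the hosts in ascending preference order and returns the first one that is not in a given list of unavailable hosts. A real MTA would attempt an SMTP connection instead of consulting such a list.

```shell
#!/bin/sh
# Sketch: choose the delivery target for a domain from its MX records.
# Input lines: "PREFERENCE HOST", e.g. from `dig +short -t mx DOMAIN`.
# $1: space-separated hosts to treat as unavailable (a stand-in for a
# failed SMTP connection). Hypothetical helper for illustration only.
pick_mx_target() {
    # Lowest preference number first, i.e. the primary MX before the backup.
    sort -k1,1n | awk -v down=" $1 " '
        # The first host not listed as down wins.
        NF >= 2 && index(down, " " $2 " ") == 0 { print $2; found = 1; exit }
        # No host reachable: a real MTA would queue the mail and retry.
        END { if (!found) exit 1 }'
}

# Usage (network access required):
#   dig +short -t mx proxmox.com | pick_mx_target ""
```

With the records from the example, the function returns mail.proxmox.com. while it is reachable and mail.provider.tld. once it is marked as down.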

Any reasonable mail server retries mail delivery if the target server is not available. Proxmox Mail Gateway stores mail and retries delivery for up to one week. Thus, you will not lose emails if your mail server is down, even if you run a single server setup.

Load balancing with MX records

Using your ISP’s mail server is not always a good idea, because many ISPs do not use advanced spam prevention techniques, or do not filter spam at all. It is often better to run a second server yourself to avoid lower spam detection rates.

It’s quite simple to set up a high-performance, load-balanced mail cluster using MX records. You just need to define two MX records with the same priority. The rest of this section will provide a complete example.

First, you need to have at least two working Proxmox Mail Gateway servers (mail1.example.com and mail2.example.com), configured as a cluster (see section Cluster Administration below), with each having its own IP address. Let us assume the following DNS address records:

```
mail1.example.com.      22879   IN      A       1.2.3.4
mail2.example.com.      22879   IN      A       1.2.3.5
```

It is always a good idea to add reverse lookup entries (PTR records) for those hosts, as many email systems nowadays reject mails from hosts without valid PTR records. Then you need to define your MX records:

```
example.com.            22879   IN      MX      10 mail1.example.com.
example.com.            22879   IN      MX      10 mail2.example.com.
```

This is all you need. Following this, you will receive mail on both hosts, load-balanced using round-robin scheduling. If one host fails, the other one is used.
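Strictly speaking, the load balancing comes from the senders. RFC 5321 (section 5.1) asks an SMTP client to randomize the order of MX hosts that share the same preference. The sketch below imitates that ordering for "PREFERENCE HOST" input using a hypothetical function, `mx_randomized_order`. awk's `srand()` seeds from the current time of day, so runs within the same second produce the same order.

```shell
#!/bin/sh
# Sketch: order MX records by ascending preference and shuffle hosts
# that share the same preference, as a sending MTA is expected to do.
# Input lines: "PREFERENCE HOST". Hypothetical helper for illustration.
mx_randomized_order() {
    # Tag each record with a random number (srand() seeds from the time
    # of day), sort by preference, then by the random tag within a group.
    awk 'BEGIN { srand() } NF >= 2 { printf "%s %.6f %s\n", $1, rand(), $2 }' |
        sort -k1,1n -k2,2n |
        awk '{ print $3 }'
}

# With the example.com records above, both hosts come first, in an
# order that varies between runs:
#   printf '10 mail1.example.com.\n10 mail2.example.com.\n' | mx_randomized_order
```

A record with a higher number, such as a backup MX, still always sorts after the equal-preference group.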

Other ways

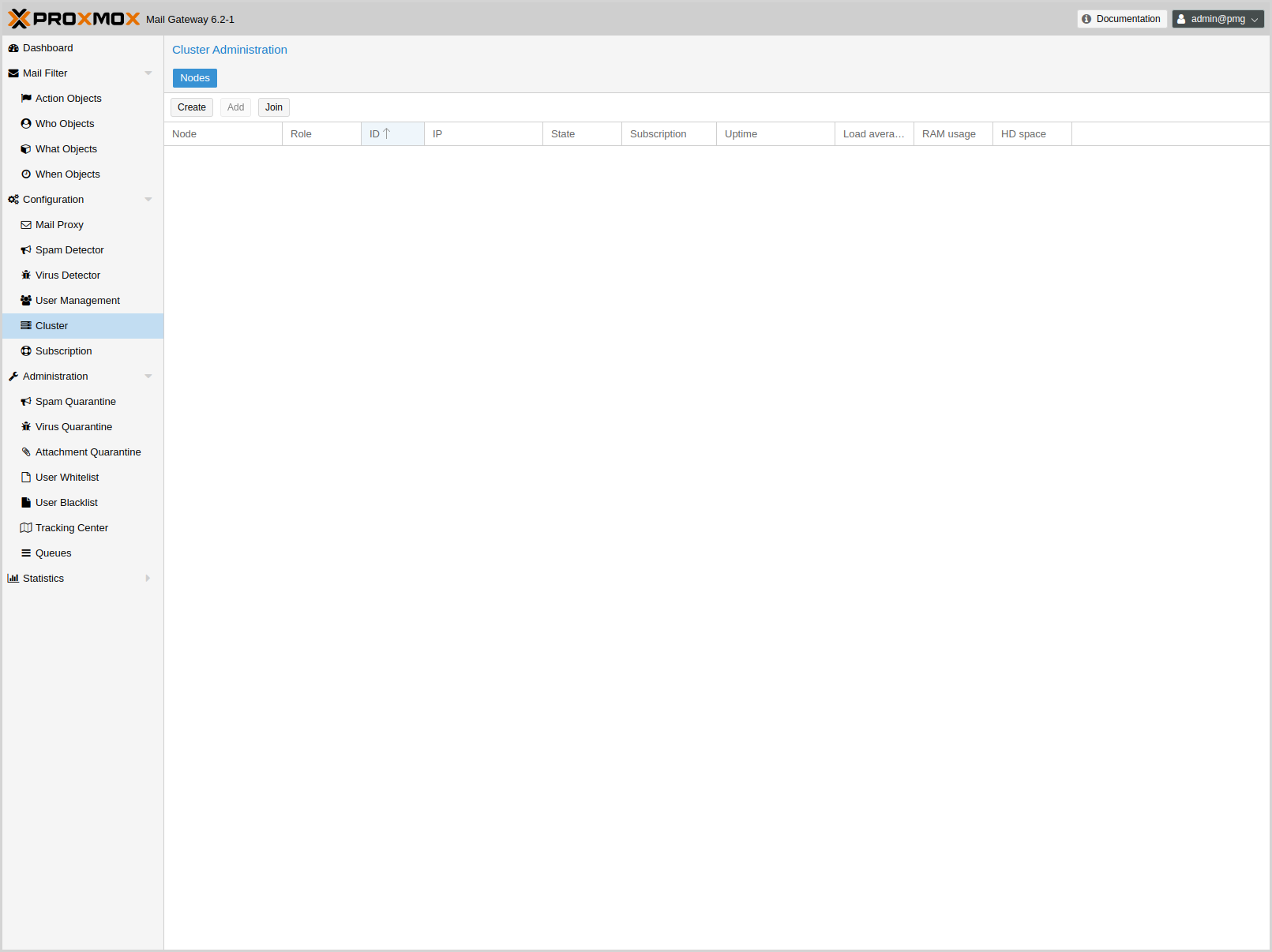

Cluster Administration

Cluster administration can be done from the GUI or by using the command-line utility pmgcm. The CLI tool is a bit more verbose, so we suggest using it if you run into any problems.

Always set up the IP configuration before adding a node to the cluster. IP address, network mask, gateway address and hostname can’t be changed later.

Creating a Cluster

- Make sure you have the right IP configuration (IP/MASK/GATEWAY/HOSTNAME), because you cannot change it later.

- Press the Create button in the GUI, or run the cluster creation command:

```
pmgcm create
```

The node where you run the cluster create command will be the master node.

Show Cluster Status

The GUI shows the status of all cluster nodes. You can also view this using the command-line tool:

```
pmgcm status
--NAME(CID)--------------IPADDRESS----ROLE-STATE---------UPTIME---LOAD----MEM---DISK
pmg5(1)              192.168.2.127   master A           1 day 21:18   0.30    80%    41%
```
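For scripts, the status table can be parsed with awk. This sketch assumes the column layout shown in the status example (NAME(CID), IPADDRESS, ROLE, STATE, ...). The output is primarily meant for people, so check the hypothetical `pmg_master` helper against the output of your own version. It prints the name and IP address of the node whose role is master.

```shell
#!/bin/sh
# Sketch: print "NAME IPADDRESS" of the master node from `pmgcm status`
# output, assuming the columns NAME(CID) IPADDRESS ROLE STATE ... as in
# the example. Hypothetical helper; verify against your own output.
pmg_master() {
    awk '
        /^--/ { next }                  # skip the dashed header line
        $3 == "master" {
            name = $1
            sub(/\(.*\)$/, "", name)    # "pmg5(1)" -> "pmg5"
            print name, $2
        }'
}

# Usage on a cluster node:
#   pmgcm status | pmg_master
```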

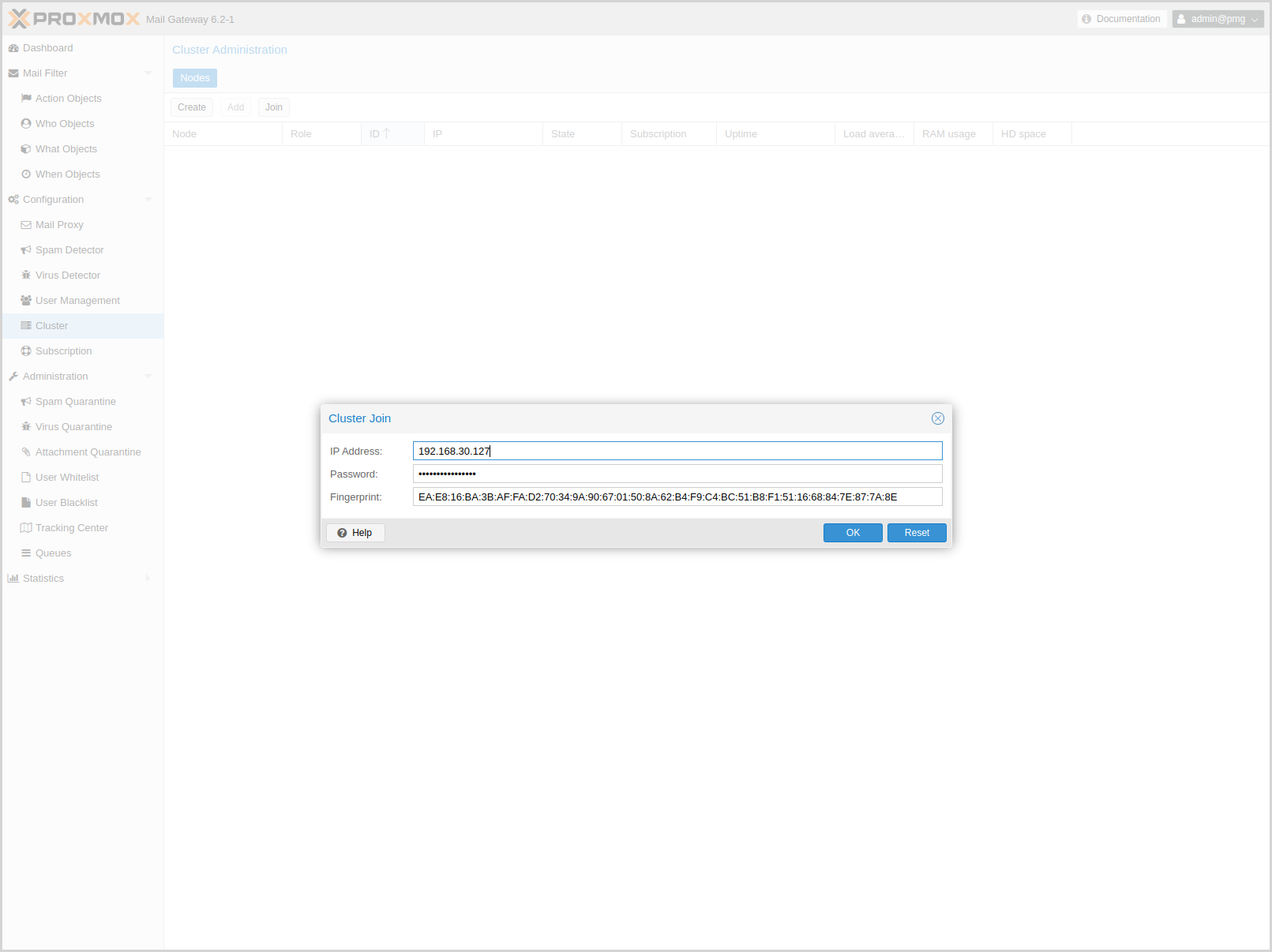

Adding Cluster Nodes

When you add a new node to a cluster (using join), all data on that node is destroyed. The whole database is initialized with the cluster data from the master.

- Make sure you have the right IP configuration.

- Run the cluster join command (on the new node):

```
pmgcm join <master_ip>
```

You need to enter the root password of the master host when asked for a password. When joining a cluster using the GUI, you also need to enter the fingerprint of the master node. You can get this information by pressing the Add button on the master node.

Joining a cluster with two-factor authentication enabled for the root user is not supported. Remove the second factor when joining the cluster.

Node initialization deletes all existing databases, stops all services accessing the database and then restarts them. Therefore, do not add nodes that are already active and receiving mail.

Also note that joining a cluster can take several minutes, because the new node needs to synchronize all data from the master (although this is done in the background).

If you join a new node, existing quarantined items from the other nodes are not synchronized to the new node.

Deleting Nodes

Please detach nodes from the cluster network before removing them from the cluster configuration. Only then should you run the following command on the master node:

```
pmgcm delete <cid>
```

Parameter <cid> is the unique cluster node ID, as listed with pmgcm status.
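To look up the <cid> for a given node name, you can split the NAME(CID) column of pmgcm status with awk. This sketch makes the same assumption as any parsing of that human-readable table: that the layout matches the status example. `pmg_cid_of` is a hypothetical helper name. It returns a non-zero status if no node of that name is listed.

```shell
#!/bin/sh
# Sketch: print the cluster node ID of node "$1" from `pmgcm status`
# output, assuming a first column like "pmg5(1)" (NAME(CID)).
# Hypothetical helper; verify against your own output.
pmg_cid_of() {
    awk -v node="$1" '
        /^--/ { next }                  # skip the dashed header line
        {
            name = $1
            sub(/\(.*$/, "", name)      # "pmg6(2)" -> "pmg6"
            if (name == node) {
                cid = $1
                sub(/^[^(]*\(/, "", cid)   # "pmg6(2)" -> "2)"
                sub(/\).*$/, "", cid)      # "2)" -> "2"
                print cid
                found = 1
                exit
            }
        }
        END { if (!found) exit 1 }'
}

# Usage on the master node (hypothetical node name):
#   cid=$(pmgcm status | pmg_cid_of pmg6) && pmgcm delete "$cid"
```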

Disaster Recovery

It is highly recommended to use redundant disks on all cluster nodes (RAID). That way, in almost any circumstance, you just need to replace the damaged hardware or disk. Proxmox Mail Gateway uses an asynchronous clustering algorithm, so you just need to reboot the repaired node, and everything will work again transparently.

The following scenarios only apply when you really lose the contents of the hard disk.

Single Node Failure

- Delete the failed node on the master:

```
pmgcm delete <cid>
```

- Add (re-join) a new node:

```
pmgcm join <master_ip>
```

Copyright and Disclaimer

Copyright © 2007-2026 Proxmox Server Solutions GmbH

This program is free software: you can redistribute it and/or modify it under the terms of the GNU Affero General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU Affero General Public License for more details.

You should have received a copy of the GNU Affero General Public License along with this program. If not, see https://www.gnu.org/licenses/